Edge Computing Reference Architecture with Rancher and Linkerd


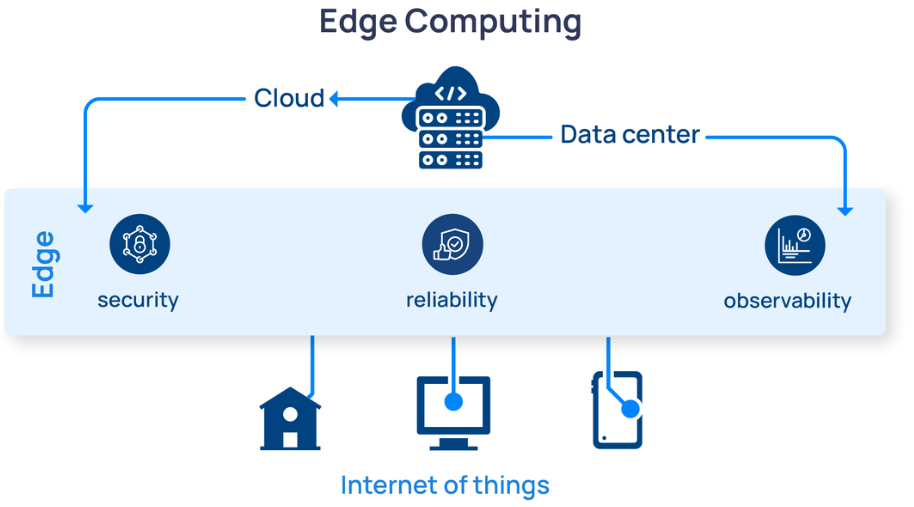

With the exponential growth of connected devices, edge computing is becoming a game changer. Edge computing is a model that processes data near the network edge, where the data is generated. It addresses latency, bandwidth and data privacy issues more effectively than centralized cloud architectures. However, managing and orchestrating applications and services at the edge is no easy task. It requires robust, lightweight and reliable tools, and some open source tools are well prepared for that challenge. By combining Rancher Prime, Buoyant’s Linkerd, RKE2 and K3s, users get a state-of-the-art, highly secure, highly performant solution for unique edge requirements.

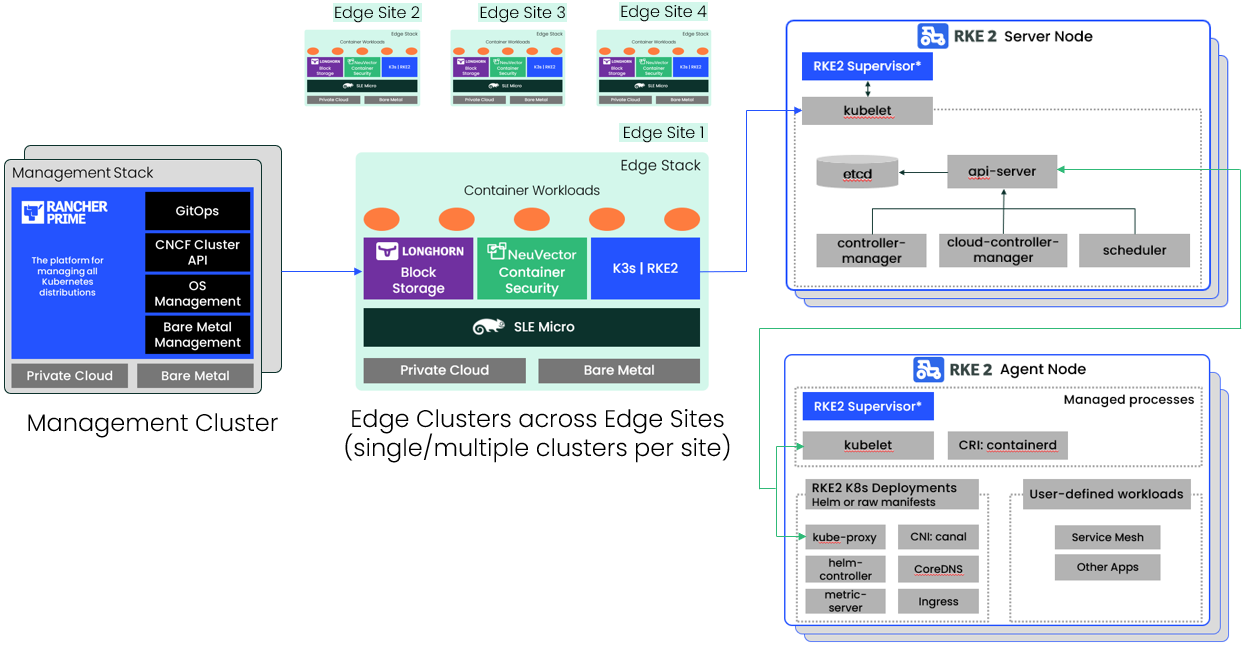

Introducing the architecture

Before we get into the “how,” let’s introduce the edge computing stack and examine why these tools work so well together for an edge computing scenario. If you are running Rancher, we recommend combining Rancher Prime, Buoyant’s Linkerd, RKE2 and K3s (for an overview of what each piece does, please refer to the table below).

| Project Name | What it is | Why for the edge? |
|---|---|---|
| Buoyant’s Linkerd | Open-source, security-first service mesh for Kubernetes | Provides security, reliability, and observability without any code changes. Is ultra-lightweight and easy to install with a small runtime footprint (this is key in edge computing where managing communication must be efficient) |
| Rancher Prime | Open-source multi-cluster Kubernetes orchestration platform | Flexible and compatible with any CNCF Kubernetes distribution, including K3s and RKE2, Rancher Prime proactively monitors cluster health and performance. |
| RKE2 | CNCF-certified Kubernetes distribution optimized for air-gapped, offline, or edge environments deployed at the core or near the edge. | Fully CNCF-certified, RKE2 improves security and simplicity of your Kubernetes deployment. It is designed to be secure, reliable, and lightweight, ideal for general-purpose computing and near-edge use cases. |
| K3s | CNCF-certified ultra-lightweight Kubernetes distribution providing the best choice for clusters running at the edge. | Ideal for edge applications, allowing for simple deployment and management while still fully CNCF-certified. It is ultra-lightweight and optimized for resource-constrained environments and functions even in remote and disconnected areas. |

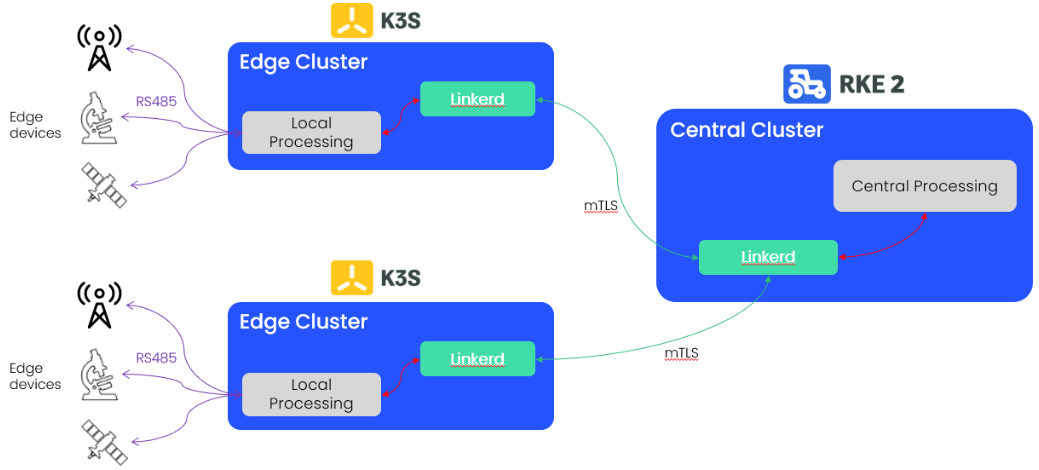

Security, reliability and observability are all critical concerns for edge computing, so it’s important to choose an architecture that helps, rather than hinders, accomplishing these goals. An effective architecture is simple to deploy and operate and uses each technology in a way that plays to its strengths, as described above. With Rancher and Linkerd, we can adopt an extremely simple architecture that nevertheless brings an enormous amount of functionality to the table.

Here, our instruments (on the left of the diagram) are connected to IoT “gateway” systems running Linux. By deploying K3s clusters with Linkerd all the way out on the edge gateways, we can use Linkerd’s secure multicluster capabilities to extend the secure service mesh from the central cluster (shown on the right, running RKE2) to the edge gateways themselves.
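The multicluster link between the central cluster and each gateway is set up with the `linkerd` CLI. The sketch below is a dry-run planner that only builds the shell pipelines and runs nothing. It assumes hypothetical kubeconfig contexts (`central-rke2`, `gw-1`, `gw-2`), that Linkerd is already installed in every cluster with a shared trust anchor (which Linkerd multicluster requires), and that each gateway should reach services exported by the central cluster. It follows the `linkerd multicluster install` / `linkerd multicluster link --cluster-name` pattern from the Linkerd 2.x documentation; the linking workflow can differ between Linkerd releases, so check the docs for your version before running anything.

```python
from typing import List

# Hypothetical kubeconfig context names; substitute your own.
CENTRAL_CONTEXT = "central-rke2"
EDGE_CONTEXTS = ["gw-1", "gw-2"]


def install_pipeline(ctx: str) -> str:
    """Install the Linkerd multicluster extension into the cluster `ctx`."""
    return (
        f"linkerd --context={ctx} multicluster install "
        f"| kubectl --context={ctx} apply -f -"
    )


def link_pipeline(target_ctx: str, source_ctx: str, cluster_name: str) -> str:
    """Let `source_ctx` mirror services exported by `target_ctx`.

    `linkerd multicluster link` runs against the cluster being linked TO and
    emits the Link resource and related objects (credentials, service mirror),
    which are applied to the cluster that will consume the mirrored services.
    """
    return (
        f"linkerd --context={target_ctx} multicluster link "
        f"--cluster-name {cluster_name} "
        f"| kubectl --context={source_ctx} apply -f -"
    )


def plan_edge_mesh(central: str, edges: List[str]) -> List[str]:
    """Dry-run plan: returns the commands in order but executes nothing."""
    cmds = [install_pipeline(central)]
    for edge in edges:
        cmds.append(install_pipeline(edge))
        # Each gateway gets a Link to the central cluster, so its workloads
        # can reach services that the central cluster exports.
        cmds.append(link_pipeline(target_ctx=central, source_ctx=edge,
                                  cluster_name="central"))
    return cmds


if __name__ == "__main__":
    for cmd in plan_edge_mesh(CENTRAL_CONTEXT, EDGE_CONTEXTS):
        print(cmd)
```

Services on the central cluster still have to be exported (in Linkerd 2.x, by labeling them `mirror.linkerd.io/exported=true`) before the gateways see them. If the central cluster also needs to call services on the gateways, the same `link_pipeline` call with the arguments swapped would add the reverse link.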

These tools all integrate seamlessly, providing a secure, reliable, observable edge platform that is lightweight and resource-efficient. Now, let’s explore why we believe these technologies are a perfect match for the edge.

Why Rancher and Buoyant’s Linkerd?

Seamless Integration

Reliability and Robustness

Lightweight and Resource Efficient

Comprehensive Observability

Operational Simplicity

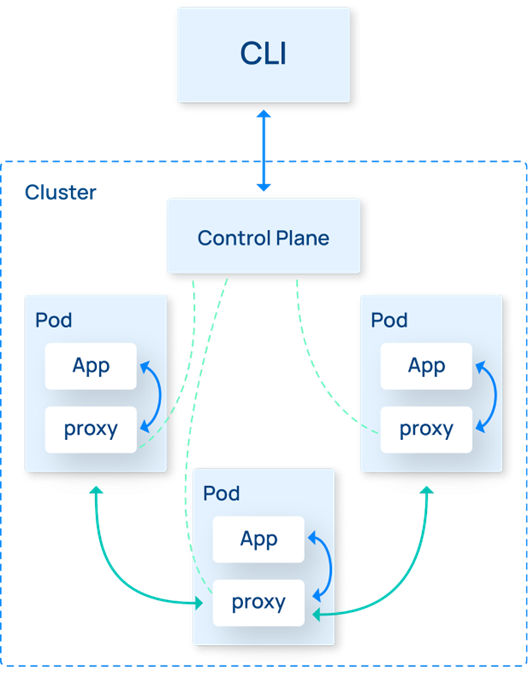

Linkerd Architecture:

Edge computing use case examples

| Industry Use Case | Retail Industry – Point of Sale (POS) Systems | Manufacturing – Predictive Maintenance | Healthcare – Remote Patient Monitoring | Transportation – Fleet Management | Summing it up |
|---|---|---|---|---|---|
| Specific challenge | In retail environments, the people setting up and maintaining the physical devices are more likely to be store managers than technicians, which leads to fragile physical systems. | Predictive maintenance is often critical: sensors on manufacturing equipment send data to a central system that predicts potential equipment failures. | In remote patient monitoring, patient health data is often collected by various devices and sent to a central system for analysis and monitoring. | Modern fleet management uses real-time vehicle data for route optimization, improved fuel efficiency, and predictive maintenance. | Edge devices must process and analyze data in real time to ensure business continuity (manufacturing, transportation), save lives (healthcare), or save money (retail). |
| Rancher | Multi-cluster management enables easy deployment and management of containerized apps across stores in various geographic locations. | Manages edge deployments, providing a central point of control for all the clusters running on the factory floor. | Helps manage the deployment of these applications across various devices and locations, ensuring uniformity and ease of management. | Manages edge deployments across various vehicles, providing a central point of control for all the clusters. | Centralized management of distributed containerized apps at the edge. |
| Linkerd | Guarantees secure, reliable communication between store POS systems and cloud-based central inventory management systems, supporting real-time inventory updates and transaction processing. Seamlessly merges multiple clusters into a single secure mesh. | Guarantees secure, reliable communication between sensors and the applications processing the data. Seamlessly merges multiple clusters into a single secure mesh. | Guarantees secure, reliable communication between patient devices and the central health monitoring system. Seamlessly merges multiple clusters into a single secure mesh. | Guarantees secure, reliable communication between onboard devices and the central fleet management system. Seamlessly merges multiple clusters into a single secure mesh. | Guarantees secure, reliable communication from the edge to the central processing and analysis system. |
| RKE2 | Runs store backend systems, ensuring reliable and secure operation of the POS system. | Provides a secure, reliable Kubernetes runtime for the central systems processing and analyzing the sensor data. | Provides a secure, reliable Kubernetes runtime for central health monitoring systems, so patient data is processed accurately and securely. | Provides a secure, reliable Kubernetes runtime for central fleet management systems, ensuring real-time fleet data is processed accurately and securely. | Provides a secure, reliable Kubernetes runtime for the central system. |
| K3s | Efficiently deploys and manages containerized apps across multiple stores. | Runs data processing apps at the edge, close to the data source, reducing latency and network load. | Efficiently processes data at the edge, reducing latency and ensuring timely alerts in case of any health anomalies. | Processes data at the edge, providing real-time insights and reducing network load. | Efficiently processes data at the edge. |
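Several K3s cells above describe the same pattern: process data close to its source so that less of it crosses the network and alerts fire sooner. Below is a minimal sketch of that pattern, the kind of workload one might run on an edge K3s cluster. The sensor ID, readings, and threshold are hypothetical, and nothing here is an API of Rancher, K3s, or Linkerd; it simply collapses each window of raw readings into one summary and keeps out-of-range values verbatim.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class WindowSummary:
    """Compact record forwarded upstream instead of every raw reading."""
    sensor_id: str
    count: int
    average: float
    maximum: float
    anomalies: List[float] = field(default_factory=list)

    @property
    def needs_immediate_alert(self) -> bool:
        # Any reading above the threshold should be pushed right away
        # rather than waiting for the next batched upload.
        return bool(self.anomalies)


def summarize_window(sensor_id: str, readings: List[float],
                     threshold: float) -> Optional[WindowSummary]:
    """Collapse one window of raw readings into a single summary.

    Readings above `threshold` are kept verbatim; the rest are reduced to
    count, average, and max. Returns None for an empty window.
    """
    if not readings:
        return None
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        average=mean(readings),
        maximum=max(readings),
        anomalies=[r for r in readings if r > threshold],
    )


if __name__ == "__main__":
    # Hypothetical vibration readings from one machine on a factory floor.
    s = summarize_window("press-7", [70.0, 71.0, 72.0, 95.0], threshold=90.0)
    print(s, s.needs_immediate_alert)
```

Forwarding one summary per window instead of every reading is what reduces network load, and pushing anomalies immediately is what keeps alert latency low. In the architecture described here, the hop from such a workload to the central cluster would travel over the Linkerd mesh.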

Edge Computing stack:

Accelerate time-to-value with the Rancher and Buoyant teams

As edge computing use cases rapidly expand, the Rancher Prime, Linkerd, RKE2 and K3s toolkit offers a state-of-the-art, highly secure, and highly performant solution to these unique challenges. It provides organizations and developers with the tools and strategies they need to deliver fast, reliable performance and robust, secure communication between microservices and applications, all while efficiently managing and orchestrating Kubernetes clusters.

Practical use cases show how these open source tools work together to create robust, efficient, and flexible edge computing solutions. From retail POS systems to remote patient monitoring, this stack has clear advantages. Easy integration streamlines your edge computing implementation and lets you process data at the edge while ensuring reliable and secure data transfer, reducing latency, and providing scalability and flexibility.

As with any implementation, however, there are some challenges. The initial setup and configuration at the edge can be complex and requires a deep understanding of these tools and of Kubernetes. If you need help and want to accelerate your time-to-value, the Buoyant and SUSE teams can help. Reach out, and let’s chat!

Contact the Buoyant team and the SUSE team.

To sum it up, combining Rancher Prime, Linkerd, RKE2 and K3s delivers a robust, observable, and easy-to-manage edge computing solution. Organizations gain a powerful set of capabilities to improve edge computing performance and tackle the complexities and challenges of managing edge environments. As edge computing applications across industries continue to proliferate, these tools will play an increasingly critical role in shaping the future of how we process, manage and utilize data in an increasingly decentralized world.
